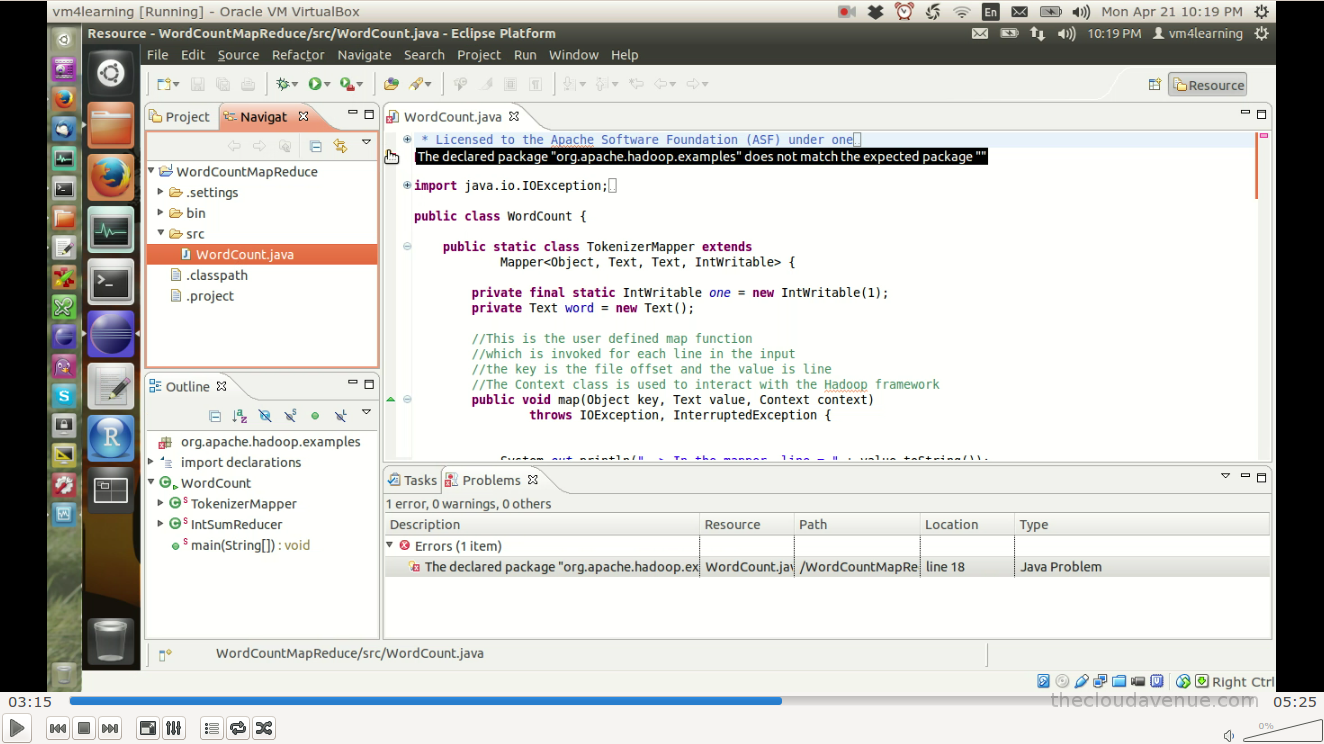

We are looking for interns to work with us on some of the Big Data technologies in Hyderabad, India. The pay will be appropriate. The intern should preferably be from a Computer Science background, be really passionate about learning new technologies, and be ready to stretch a bit. Under our guidance, the intern will install, configure, and tune everything from the Linux OS all the way up to Hadoop and related Big Data frameworks on the cluster. Once the Hadoop cluster has been set up, we have a couple of ideas that we will implement on the same cluster.

The immediate advantage is that the intern will be working on one of the current hot technologies and will have direct access to us to learn more about Big Data. Appropriate training around Big Data will also be given as required. In addition, the work done by the intern will definitely help them get through the different Cloudera certifications.

BTW, we are looking for someone who can work with us full time, not part time. If you or anyone you know is interested in taking an internship, please send an email with a CV to praveensripati@gmail.com.
