HBase's Put API can be used to insert data into HDFS, but the data has to go through the complete HBase path, as explained here. As a result, inserting data in bulk with the Put API is a lot slower than the bulk loading option. There are some references on bulk loading (1, 2), but they are either incomplete or a bit too complicated.

Here are the steps involved in bulk loading data into HBase:

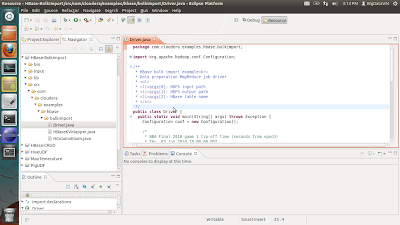

1) Create a project in Eclipse with the Driver.java, HBaseKVMapper.java and HColumnEnum.java programs. These files contain the Java MapReduce code for converting the input data into the HFile format.
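To make the conversion concrete, here is a minimal plain-Java sketch of what a mapper such as HBaseKVMapper conceptually does with one CSV line: it picks a row key and turns the remaining fields into cells under a column family. It deliberately uses no HBase or opencsv classes. The `Cell` class, the qualifier names, the choice of the first field as row key, and the sample line are all hypothetical stand-ins, not the actual layout of RowFeeder.csv or the code in the project files.

```java
import java.util.ArrayList;
import java.util.List;

public class CsvToCellsSketch {

    // Hypothetical column qualifiers; in the real project they would come from HColumnEnum.java.
    static final String[] QUALIFIERS = {"col_a", "col_b", "col_c"};

    // Column family name, matching the 'srv' family the table is created with.
    static final String FAMILY = "srv";

    /** One cell: row key, column family, column qualifier, value. */
    static final class Cell {
        final String row;
        final String family;
        final String qualifier;
        final String value;

        Cell(String row, String family, String qualifier, String value) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
        }

        @Override
        public String toString() {
            return row + " " + family + ":" + qualifier + "=" + value;
        }
    }

    /** Assumes the first field is the row key and the remaining fields are cell values. */
    static List<Cell> toCells(String csvLine) {
        // Naive split for illustration only: quoted fields are not handled.
        String[] fields = csvLine.split(",", -1);
        String rowKey = fields[0].trim();
        List<Cell> cells = new ArrayList<>();
        for (int i = 1; i < fields.length && i <= QUALIFIERS.length; i++) {
            String value = fields[i].trim();
            if (value.isEmpty()) {
                continue; // skip empty fields instead of emitting empty cells
            }
            cells.add(new Cell(rowKey, FAMILY, QUALIFIERS[i - 1], value));
        }
        return cells;
    }

    public static void main(String[] args) {
        // Hypothetical input line, not taken from RowFeeder.csv.
        for (Cell cell : toCells("0001,foo,,bar")) {
            System.out.println(cell);
        }
    }
}
```

In the actual job, each such cell would be emitted as an HBase KeyValue keyed by the row, so that the output format can write it into an HFile.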

2) Include the libraries below in the lib folder. All of them except joda* and opencsv* are part of the HBase installation.

commons-configuration-1.6.jar, commons-httpclient-3.1.jar, commons-lang-2.5.jar, commons-logging-1.1.1.jar, guava-11.0.2.jar, hadoop-core-1.0.4.jar, hbase-0.94.5.jar, jackson-core-asl-1.8.8.jar, jackson-mapper-asl-1.8.8.jar, joda-time-2.2.jar, log4j-1.2.16.jar, opencsv-2.3.jar, protobuf-java-2.4.0a.jar, slf4j-api-1.4.3.jar, slf4j-log4j12-1.4.3.jar, zookeeper-3.4.5.jar

3) Make sure that the above mentioned java programs compile properly without any errors in Eclipse. Now export the project as a jar file.

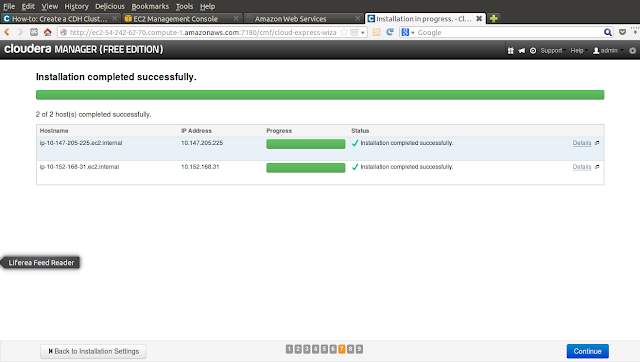

4) Start the HDFS, MapReduce and HBase daemons and make sure they are running properly.

5) Download the input CSV data (RowFeeder.csv) and put it into HDFS.

bin/hadoop fs -mkdir input/

bin/hadoop fs -put /home/training/WorkSpace/BigData/HBase-BulkImport/input/RowFeeder.csv input/RowFeeder.csv

6) Start the HBase shell and create and alter the table

create 'NBAFinal2010', {NAME => 'srv'}, {SPLITS => ['0000', '0900', '1800', '2700', '3600']}

disable 'NBAFinal2010'

alter 'NBAFinal2010', {METHOD => 'table_att', MAX_FILESIZE => '10737418240'}

enable 'NBAFinal2010'
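The SPLITS clause pre-splits the table: five split keys produce six regions, each covering a half-open range of row keys. The sketch below is a plain-Java illustration of that partitioning, with no HBase classes; the class and method names are made up for this example. It assumes ASCII row keys, for which Java's string comparison agrees with HBase's byte-wise ordering.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class PreSplitSketch {

    // Split keys copied from the create statement above (already sorted).
    static final String[] SPLITS = {"0000", "0900", "1800", "2700", "3600"};

    /** Describes each region as [startKey, endKey); an empty key means "unbounded". */
    static List<String> regionRanges(String[] splits) {
        List<String> ranges = new ArrayList<>();
        String start = "";
        for (String split : splits) {
            ranges.add("[" + start + ", " + split + ")");
            start = split;
        }
        ranges.add("[" + start + ", )");
        return ranges;
    }

    /** Returns the zero-based index of the region whose range contains rowKey. */
    static int regionIndex(String[] splits, String rowKey) {
        int pos = Arrays.binarySearch(splits, rowKey);
        // Exact hit on split i: the key is the start key of region i + 1.
        // Otherwise binarySearch returns -(insertionPoint) - 1, and the
        // insertion point is the index of the owning region.
        return pos >= 0 ? pos + 1 : -pos - 1;
    }

    public static void main(String[] args) {
        List<String> ranges = regionRanges(SPLITS);
        System.out.println(ranges.size() + " regions: " + ranges);
        System.out.println("row 0450 -> region " + regionIndex(SPLITS, "0450"));
        System.out.println("row 0900 -> region " + regionIndex(SPLITS, "0900"));
    }
}
```

For reference, the MAX_FILESIZE value in the alter statement, 10737418240 bytes, is 10 GiB (10 × 1024³).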

7) Add the following to the conf/hadoop-env.sh file. This enables the Hadoop client to connect to HBase and get the number of splits.

export HADOOP_CLASSPATH=/home/training/Installations/hbase-0.94.5/lib/guava-11.0.2.jar:/home/training/Installations/hbase-0.94.5/lib/zookeeper-3.4.5.jar:/home/training/Installations/hbase-0.94.5/lib/protobuf-java-2.4.0a.jar

8) Run the MapReduce job as below to generate the HFiles. The first parameter is the input folder where the csv file is present, then the output folder where the HFiles will be created and the final argument is the table name.

bin/hadoop jar /home/training/Desktop/HBase-BulkImport.jar com.cloudera.examples.hbase.bulkimport.Driver input/ output/ NBAFinal2010

9) The HFiles should be created in HDFS in the output folder. The number of HFiles corresponds to the number of regions defined by the splits in the NBAFinal2010 table.
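As a rough illustration of why the HFile count follows the table's regions: assuming the driver sets the job up with HBase's incremental-load configuration (as is typical for this kind of job), every row is routed to the reducer that owns its region and rows are written out in sorted order, so each region that receives data gets its own output. The plain-Java sketch below mimics that grouping with a TreeMap. It is a stand-in with hypothetical row keys and made-up names, not the code in Driver.java.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

public class HFileGroupingSketch {

    /** Groups row keys by region start key; each group is kept sorted, as an HFile requires. */
    static Map<String, TreeSet<String>> groupByRegion(List<String> splits, List<String> rowKeys) {
        // Region start keys: the empty key for the first region, then each split key.
        TreeMap<String, TreeSet<String>> regions = new TreeMap<>();
        regions.put("", new TreeSet<>());
        for (String split : splits) {
            regions.put(split, new TreeSet<>());
        }
        for (String row : rowKeys) {
            // floorKey picks the greatest start key <= row, i.e. the owning region.
            regions.get(regions.floorKey(row)).add(row);
        }
        // In this sketch, regions that received no rows are dropped from the result.
        regions.values().removeIf(TreeSet::isEmpty);
        return regions;
    }

    public static void main(String[] args) {
        List<String> splits = Arrays.asList("0000", "0900", "1800", "2700", "3600");
        // Hypothetical row keys, deliberately unsorted.
        List<String> rows = Arrays.asList("1850", "0042", "0900", "0007", "3999");
        groupByRegion(splits, rows).forEach((start, keys) ->
                System.out.println("region starting at '" + start + "': " + keys));
    }
}
```

With a single column family such as 'srv', each group here would correspond to one HFile in the output folder.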

10) Once the HFiles are created in HDFS, they can be linked to the NBAFinal2010 table using the command below. The first argument is the location of the HFiles and the final argument is the table name.

bin/hadoop jar /home/training/Installations/hbase-0.94.5/hbase-0.94.5.jar completebulkload /user/training/output/ NBAFinal2010

The above command will automatically update the -ROOT- and the .META. system tables in HBase and should just take a couple of seconds to complete.

11) Run count 'NBAFinal2010' in the HBase shell and verify that the data has been inserted into HBase.

Note (1st October, 2013): Here is a detailed blog post from Cloudera on the same topic.