In the previous blogs (1, 2), I described setting up a K8S Cluster on a laptop for the sake of experimenting. With that setup, we can connect to the Control Plane/Master instance and execute kubectl commands to interact with the K8S Cluster. For those who are new to K8S or not from a technology background, the many kubectl options can be a bit intimidating; this is where Lens (K8S IDE) (1, 2) comes into play.

Lens is dubbed the K8S IDE. It is FOSS and can be integrated with multiple K8S Clusters at a time. Depending on the permissions, both read and write operations are allowed on the K8S Cluster. As shown below, I had configured the Virtual Machines for the K8S Cluster on the laptop using VirtualBox.

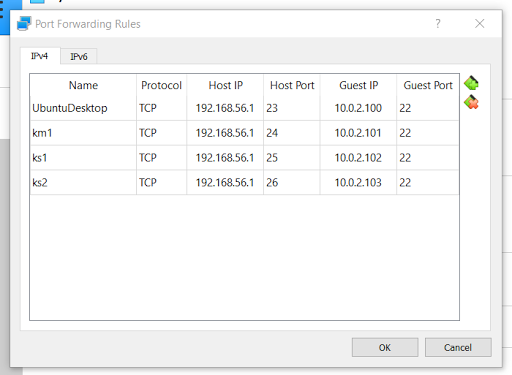

'NAT Networking' was used for the VirtualBox networking, as it allows working offline, network communication across the Virtual Machines, and access to the internet. The only caveat is that there is no direct connectivity from the Host Machine to the Guest Virtual Machines; port forwarding has to be used, as mentioned in the documentation here (1).

Below is how the port forwarding has been configured in the VirtualBox global settings. The Host IP has been left out and defaults to localhost. Port 27 on localhost points to port 6443, on which the K8S API Server is listening. This rule is required for Lens to connect to the K8S Cluster; the rest of the rules are for connecting to the Virtual Machine instances via SSH.
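For those who prefer the command line over the VirtualBox dialog, the API Server rule described above could probably be built along the following lines. This is only a rough sketch: the NAT Network name "NatNetwork" and the rule name "k8s-api" are my assumptions and not values taken from the setup above, and the script prints the VBoxManage command instead of running it.

```shell
#!/bin/sh
# Sketch only: NETNAME and the rule name "k8s-api" are assumptions.
NETNAME="NatNetwork"     # hypothetical NAT Network name; check yours in VirtualBox
GUEST_IP="10.0.2.101"    # Control Plane/Master IP used in this post
HOST_PORT=27             # host port used in this post
GUEST_PORT=6443          # port the K8S API Server listens on

# Rule format: name:protocol:[host ip]:host port:[guest ip]:guest port
# The host IP is left empty ([]), as in the rule described above.
API_RULE="k8s-api:tcp:[]:${HOST_PORT}:[${GUEST_IP}]:${GUEST_PORT}"

# Print the command instead of executing it, so the sketch is safe to try anywhere.
echo "VBoxManage natnetwork modify --netname ${NETNAME} --port-forward-4 '${API_RULE}'"
```

Once the printed command looks right for your network, it can be copied and run on the host.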

In Lens, the ".kube/config" file from the K8S Control Plane/Master must be imported while setting up the Cluster. The file didn't work as-is, because port forwarding is used and the X509 certificates are not valid for the localhost/127.0.0.1 IP address. I had to do two things.

(a) Regenerate the certificates on the Control Plane/Master as root using the commands below. Note that 10.0.2.101 is the IP address of the K8S Control Plane/Master on which the API Server is running. Thanks to the StackOverflow solution here (1).

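```shell
# Remove the existing API Server certificate and key so that they get regenerated
rm /etc/kubernetes/pki/apiserver.*
# Recreate the missing certificates, adding both IPs as Subject Alternative Names
kubeadm init phase certs all --apiserver-advertise-address=0.0.0.0 --apiserver-cert-extra-sans=10.0.2.101,127.0.0.1
# Remove the running API Server container so that it is recreated with the new certificate
docker rm -f $(docker ps -q -f 'name=k8s_kube-apiserver*')
# Restart the kubelet
systemctl restart kubelet
```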
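Before moving on, it may be worth checking that the regenerated certificate really lists both IP addresses. Below is a minimal sketch using openssl; the helper name has_ip_san is my own, and the certificate path is the default kubeadm location that the rm command above also refers to.

```shell
#!/bin/sh
# has_ip_san CERT IP: succeeds if the certificate lists IP as a
# Subject Alternative Name (hypothetical helper, not part of kubeadm).
has_ip_san() {
  openssl x509 -in "$1" -noout -text 2>/dev/null \
    | grep -A1 'Subject Alternative Name' \
    | tr ',' '\n' \
    | sed 's/^ *//; s/ *$//' \
    | grep -Fxq "IP Address:$2"
}

CERT=/etc/kubernetes/pki/apiserver.crt
if [ -r "$CERT" ]; then
  for ip in 10.0.2.101 127.0.0.1; do
    if has_ip_san "$CERT" "$ip"; then
      echo "$ip: present"
    else
      echo "$ip: MISSING"
    fi
  done
else
  echo "no readable certificate at $CERT; run this on the Control Plane/Master"
fi
```

If 127.0.0.1 shows up as missing, Lens will most likely still reject the connection through the forwarded port.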

(b) Then modify the config file to point to 127.0.0.1:27 before importing it and creating a K8S Cluster in Lens. Note that 27 is the port number configured in the VirtualBox port forwarding rules for the API Server.
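The edit in step (b) can be done by hand, but a small script helps if the config gets regenerated often. Here is a sketch, assuming the file has the usual "server: https://..." line; the function name point_to_forwarded_port and the sample file are made up for illustration, and a real ".kube/config" also carries certificate data that is left untouched.

```shell
#!/bin/sh
# point_to_forwarded_port SRC DST: copy a kubeconfig, rewriting every
# "server:" URL to the forwarded address on the host (hypothetical helper).
point_to_forwarded_port() {
  sed -E 's#^([[:space:]]*server:[[:space:]]*)https://[^[:space:]]+#\1https://127.0.0.1:27#' "$1" > "$2"
}

# Illustrative sample only; a real config has more fields.
SAMPLE=$(mktemp)
cat > "$SAMPLE" <<'EOF'
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: https://10.0.2.101:6443
  name: kubernetes
EOF

point_to_forwarded_port "$SAMPLE" "$SAMPLE.lens"
grep 'server:' "$SAMPLE.lens"
```

Writing to a separate file keeps the original config on the Control Plane/Master intact, so kubectl on the node itself continues to use 10.0.2.101:6443.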

Completing the above two steps allowed Lens to connect to the K8S API Server, which is the single point of interface to the K8S Cluster. It took some time to figure out, but it was interesting and fun. Below are some of the Lens screens covering various dimensions of the Cluster.

(Details of the Control Plane/Master)

(Details of the Slave)

(Details of the nodes in the Cluster)

(Details of the Control Plane/Master)

(Overview of the workloads on the Cluster)

(Pods on the Cluster)

(DaemonSets on the Cluster)

(Services on the Cluster)

(Endpoints on the Cluster)

(Namespaces in the Cluster)

Likewise, it's possible to connect to multiple K8S Clusters from Lens and operate on them. Lens is context aware and automatically downloads the correct version of kubectl from the Google K8S repository.

Conclusion

Lens is a nice K8S IDE and a good way to get started with K8S. It is also very useful for those who are not that technology savvy and want to browse around the different components in K8S. But for those who are familiar with K8S or have spent a good amount of time with it, Lens is a hindrance, and they would prefer executing kubectl commands. It's very much like using "vi" vs "notepad" for editing files. With the recent acquisition of Lens by Mirantis (1), we need to wait and see how Lens adds to productivity.

Also, don't get too used to Lens: the CKA and CKAD certifications don't allow the usage of Lens. Everything has to be performed from the command line, and one needs to be very familiar with vi/tmux and a bunch of command line tools.
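As a starting point for practicing on the command line, most of the Lens screens shown earlier map roughly to plain "kubectl get" queries. The sketch below only prints the commands; the helper name lens_view_cmds is made up, and the mapping is my approximation rather than what Lens runs internally.

```shell
#!/bin/sh
# Resource kinds matching the Lens screens shown earlier in the post.
RESOURCES="nodes pods daemonsets services endpoints namespaces"

# Print one kubectl command per Lens view (hypothetical helper).
# Pipe the output to "sh" on a machine with cluster access to run them.
lens_view_cmds() {
  for r in $RESOURCES; do
    echo "kubectl get $r --all-namespaces -o wide"
  done
}

lens_view_cmds
```

Typing these out a few times is probably better exam preparation than clicking through the same views in Lens.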