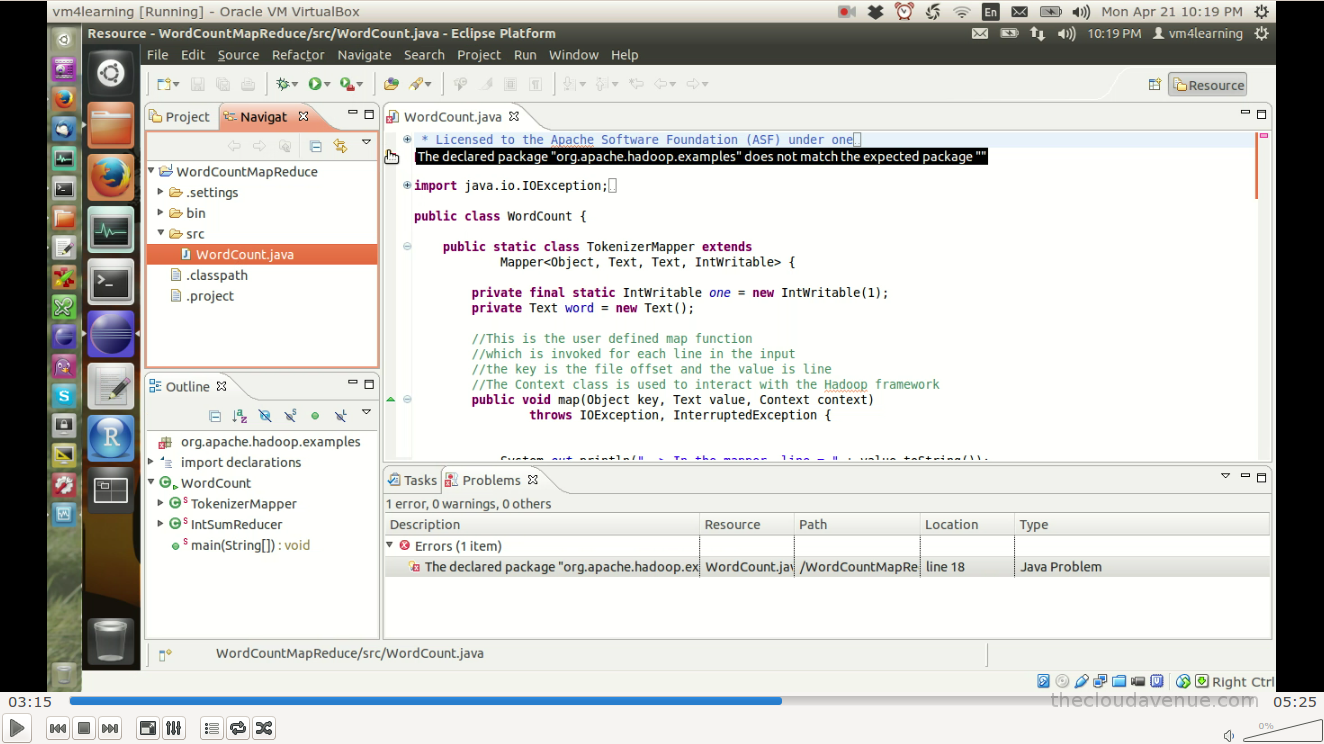

In an earlier blog post (1), we looked at how to develop MapReduce programs in Eclipse. Here (Develop-MR-Program-In-Eclipse.mp4) is the screencast for the same. The screencast was recorded using Kazam on Ubuntu 14.04 in the mp4 format. For some reason Windows Media Player is not able to play the file, but VLC is.

The process in the screencast only works on Linux and assumes that Java and Eclipse are already installed. Here (1, 2) are the instructions if Java is not installed. Also, Hadoop (hadoop-1.2.1.tar.gz) has to be downloaded from here (1) and extracted to a folder. The code for the WordCount MapReduce Java program can either be written by hand or downloaded here (1).
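For anyone who has not seen WordCount before, here is a minimal plain-Java sketch of what the program computes. It is not the downloadable code and it deliberately uses no Hadoop classes, so it runs without the Hadoop jars on the classpath. The class name `WordCountSketch` and the methods `map`, `reduce` and `countWords` are made up for illustration; in the real program the same two steps would live in Hadoop Mapper and Reducer classes, and the grouping step would be done by the framework.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Plain-Java illustration of the WordCount logic (hypothetical names, no Hadoop API).
class WordCountSketch {

    // "Map" step: emit a (word, 1) pair for every whitespace-separated token in a line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokens = new StringTokenizer(line);
        while (tokens.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<>(tokens.nextToken(), 1));
        }
        return pairs;
    }

    // "Reduce" step: sum all the counts that were emitted for a single word.
    static int reduce(List<Integer> counts) {
        int sum = 0;
        for (int count : counts) {
            sum += count;
        }
        return sum;
    }

    // Stand-in for the framework: run map on each line, group values by word, then reduce.
    static Map<String, Integer> countWords(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                       .add(pair.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            result.put(entry.getKey(), reduce(entry.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Prints {eclipse=1, hadoop=1, hello=2}
        System.out.println(countWords(Arrays.asList("hello hadoop", "hello eclipse")));
    }
}
```

A `TreeMap` is used only so that the printed result comes out in a predictable, sorted order.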

Hi Praveen,

I am running CDH 4.5 on a single node, and I was trying to submit a MapReduce job directly from Eclipse rather than creating a jar and then executing it (which is time consuming during MapReduce program development).

I tried passing the following arguments (without the quotes) in the Eclipse run configuration:

"-jt ubuntu:8021 -fs ubuntu:8020 hdfs://ubuntu:8020/user/hirendra/input/NAMES.TXT hdfs://ubuntu:8020/user/output"

My job is getting submitted to the Hadoop cluster, but it fails with the following error.

Can you please help me out?

java.io.IOException: Cannot run program "/run/cloudera-scm-agent/process/237-mapreduce-JOBTRACKER/topology.py" (in directory "/run/cloudera-scm-agent/process/237-mapreduce-JOBTRACKER"): java.io.IOException: error=13, Permission denied