In an earlier blog entry we looked at how to start an EMR cluster and submit the predefined WordCount example MR job. This entry details the steps required to submit a user-defined MR job to Amazon EMR. We will also look at how to open an ssh tunnel to the cluster master to access the MR and HDFS consoles.

Amazon EMR has three types of nodes: the master node, core nodes (for task execution and storage) and task nodes (for execution only). We will create a master node, push the word count code to it, compile it, create a jar file, push the jar and the data file to S3, and finally execute the MR program in the jar file. Note that the data can also be in HDFS.

- As described in the previous blog entry, the first step is to create the `key pairs` from the EC2 management console and store the pem key file for logging into the master node later. Next, create the Access Keys (Access Key ID and Secret Access Key) in the IAM Management Console.

- Go to the S3 management console and create a bucket `thecloudavenue-mroutput`. Note that bucket names must be unique, so pick a different name. This entry will nonetheless refer to the bucket as `thecloudavenue-mroutput`.

- Go to the EMR management console and create a cluster. We will create a master node and not run any steps for now.

- Give the cluster a name and specify the logs folder location in S3. For some reason the logs show up in S3 with a delay, which has made debugging MR jobs a bit difficult.

- Make sure the number of master instances is set to 1 and the number of core/task instances is set to 0. Since we will be running simple MR programs on a small dataset, multiple nodes are not required; a single node is more than enough.

- Note that at this point we are just setting up an EMR cluster and not submitting any jobs yet.

- Once the cluster is up and running, the master instance should be in the running state. This can be observed from the EC2 management console. Note down the Public DNS of the master.

- Install ssh (`sudo apt-get install ssh`) on the local machine and log in to the master. Point ssh to the pem file that was downloaded during the Key Pair creation from the EC2 management console.
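One detail the step above leaves implicit: OpenSSH typically refuses a private key file that other users can read, so it helps to restrict the pem file before logging in. A minimal sketch, assuming the key sits at the path used in this entry (the `PEM_FILE` variable and the `restrict_key` helper are names made up for illustration):

```shell
# Path to the key pair file downloaded from the EC2 console.
# The default mirrors the path used in this entry; override PEM_FILE as needed.
PEM_FILE="${PEM_FILE:-$HOME/Desktop/emr/thecloudavenue-emr.pem}"

# Make the key readable by its owner only, if the file exists.
restrict_key() {
  [ -f "$1" ] && chmod 400 "$1"
}

restrict_key "$PEM_FILE" || echo "key file not found: $PEM_FILE" >&2
```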

ssh -i ~/Desktop/emr/thecloudavenue-emr.pem hadoop@ec2-54-211-100-209.compute-1.amazonaws.com

- Run the jps command to make sure that the processes are running.

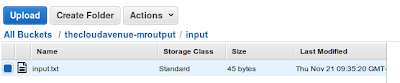

- Go back to the S3 management console and create 3 folders (binary, input and logs).

- Create WordCount.java using vi or some other editor and then compile the code as below.

mkdir wordcount_classes

javac -classpath /home/hadoop/hadoop-core-1.0.3.jar -d wordcount_classes WordCount.java

jar -cvf /home/hadoop/wordcount.jar -C wordcount_classes/ .

- Once the jar has been created, copy it to S3 and verify the same from the S3 management console.

hadoop fs -put /home/hadoop/wordcount.jar s3://thecloudavenue-mroutput/binary/

- Create an input.txt file on the local machine and upload it to S3.
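Since input.txt is created locally, the expected counts can be worked out before the job runs and compared with the job output later. The following is only a sketch: it assumes the WordCount job splits words on whitespace (as the stock Hadoop example does) and writes one tab-separated `word count` pair per line sorted by word. The `local_wordcount` function name is made up for illustration.

```shell
# Print "word<TAB>count" for every whitespace-separated word in the file $1,
# sorted by word in byte order.
local_wordcount() {
  tr -s '[:space:]' '\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ print $2 "\t" $1 }'
}
```

Running `local_wordcount input.txt` on the local machine gives a listing to check against the files the job writes to S3.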

- With the cluster up and running and the jar and input data in S3, it is time to submit an MR job to the cluster. Add a step and specify the job name, the jar location and the additional arguments. The jar doesn't have the main class specified in its manifest file, so the MR driver class has to be specified in the arguments.
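To make the step fields concrete, here is a sketch of what the jar location and the arguments might look like. The driver class name `WordCount` (with no package), the two-argument input/output convention and the `input`/`output` folder names are assumptions based on the rest of this entry, not values taken from the console; `step_args` is a made-up helper.

```shell
# Bucket name used throughout this entry.
BUCKET="thecloudavenue-mroutput"

# Assumptions: the driver class is named WordCount (no package) and the job
# takes an input path followed by an output path.
step_args() {
  echo "WordCount s3://$1/input/ s3://$1/output/"
}

echo "JAR location: s3://$BUCKET/binary/wordcount.jar"
echo "Arguments:    $(step_args "$BUCKET")"
```

Hadoop refuses to write into an output directory that already exists, so the output folder should not be created beforehand.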

- After a couple of minutes the Step should be in the running state.

- The output should appear in the output folder once the job is complete. This can be observed from the S3 management console.

- The cluster will then be in the Waiting state, ready for more jobs to be submitted. Submit more jobs or terminate the cluster as shown in the following steps.

Now we will look at how to access the MR (port 9100 on the master) and HDFS (port 9101 on the master) consoles from the local machine. By default, the ports for the MR and HDFS consoles running on the master node are blocked from the outside world.

- Create an ssh tunnel to the master.

ssh -i /home/praveensripati/Desktop/emr/thecloudavenue-emr.pem -ND 8157 hadoop@ec2-54-211-100-209.compute-1.amazonaws.com

- Download the FoxyProxy add-on and configure it as mentioned here. This will make the MR and HDFS console requests go through the ssh tunnel to the master, while the rest of the requests go as usual.

- Now, with the ssh tunnel established, the MR and HDFS consoles should be accessible from the local machine.
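The tunnel can also be checked without a browser. The sketch below assumes the tunnel from the earlier step is open on local port 8157 and that curl is installed on the local machine; `console_url` and `check_console` are helper names made up for illustration.

```shell
# Public DNS of the master, as noted from the EC2 management console.
MASTER="ec2-54-211-100-209.compute-1.amazonaws.com"

# Build the console URL for a host and port (9100 for MR, 9101 for HDFS).
console_url() {
  echo "http://$1:$2/"
}

# Fetch a console page through the SOCKS proxy opened by `ssh -ND 8157`
# and print only the HTTP status code.
check_console() {
  curl --silent --output /dev/null --write-out '%{http_code}\n' \
       --socks5-hostname localhost:8157 "$(console_url "$MASTER" "$1")"
}
```

With the tunnel up, `check_console 9100` and `check_console 9101` should each print a status code if the consoles are reachable.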

- Now that the job has been completed, it is time to terminate the cluster and delete the bucket in S3.

As mentioned earlier, AWS makes it easy to set up a Hadoop cluster without worrying about infrastructure procurement, security, networking and some of the other common concerns involved in setting up a cluster.
