In an earlier blog entry, we looked into how easy it was to set up a CDH cluster on Amazon AWS. This blog entry looks into Amazon EMR, which makes it even easier to get started with Hadoop. Amazon EMR is a PaaS (Platform as a Service) in which Hadoop is already installed, configured and tuned, and on which applications (MR, Pig, Hive etc.) can be built and run.

We will submit a simple WordCount MapReduce program that comes with EMR. AWS has some of the best documentation around, but a few steps are missing; this entry tries to fill them in and add more detail. The whole exercise costs less than 0.5 USD, so it should not be much of a strain on the pocket.

Amazon makes it easy to get started with Hadoop and related technologies. All that is needed is a credit card to pay for the usage. The good thing is that Amazon has been slashing prices, making it more and more affordable to everyone. The barrier to implementing a new idea is getting lower day by day.

So, here are the steps:

- Create an account with AWS and provide the credit card and other details.

- The next step is to go to the EC2 management console and create a Key Pair.

Download the pem file; it will be used along with ssh to log in to the Hadoop nodes which we will be creating soon. The same pem file is also required to create an SSH tunnel to the Hadoop master node.
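For readers who would rather script this step than click through the console, here is a minimal sketch using boto3, the AWS SDK for Python. The post itself only uses the console, so the use of boto3 and the names `emr-demo` and `emr-demo.pem` are assumptions for illustration, and the API call needs AWS credentials to be configured.

```python
import os
import stat


def save_private_key(key_material: str, path: str) -> str:
    """Write the PEM text to `path` and make it readable by the owner only."""
    with open(path, "w") as handle:
        handle.write(key_material)
    # ssh refuses to use a private key file that other users can read.
    os.chmod(path, stat.S_IRUSR)
    return path


def create_key_pair(key_name: str, path: str) -> str:
    """Create an EC2 key pair and store its private key locally.

    `key_name` and `path` are hypothetical values chosen by the caller.
    """
    import boto3  # imported lazily so save_private_key works without boto3

    ec2 = boto3.client("ec2")
    response = ec2.create_key_pair(KeyName=key_name)
    # The private key is returned only once, at creation time.
    return save_private_key(response["KeyMaterial"], path)


if __name__ == "__main__":
    print(create_key_pair("emr-demo", "emr-demo.pem"))
```

The private key cannot be downloaded again later, which is why the sketch writes it to disk immediately.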

- The next step is to generate the Access Keys (Access Key ID and Secret Access Key) in the IAM Management Console. The access keys allow users to make API calls to the different AWS services, which we are not going to do right now.

- Now create a bucket in S3, which will host the log files and the output data for the simple WordCount MR job. Note that the bucket name has to be unique. An MR job in EMR can also consume data from HDFS, but in this blog we will keep the input/output data in S3.
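The bucket can also be created from a script. The sketch below assumes boto3 and a hypothetical bucket name. It checks only the basic S3 naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit), not whether the name is already taken by someone else.

```python
import re

# Basic S3 naming rules only; this does not check global uniqueness.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the basic S3 bucket naming rules."""
    return bool(_BUCKET_RE.fullmatch(name)) and ".." not in name


def create_bucket(name: str, region: str = "us-east-1") -> None:
    """Create the bucket that will hold the EMR logs and job output."""
    import boto3  # imported lazily so the name check works without boto3

    if not looks_like_valid_bucket_name(name):
        raise ValueError(f"not a valid bucket name: {name!r}")
    s3 = boto3.client("s3", region_name=region)
    if region == "us-east-1":
        # us-east-1 is the default and must not be passed as a constraint.
        s3.create_bucket(Bucket=name)
    else:
        s3.create_bucket(
            Bucket=name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )


if __name__ == "__main__":
    create_bucket("emr-wordcount-demo-12345")  # hypothetical bucket name
```

If the name is already in use, `create_bucket` raises an error from the service, so pick something specific such as a name with a date or account suffix.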

- Now the fun starts: create a Hadoop cluster from the EMR Management console.

- The `Configure sample application` option offers a set of samples that make it very easy to get started with EMR.

- Select the `Word Count` sample application and enter the locations of the output and the log files. Make sure to use the bucket which was created in the earlier step.

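- Use the default options for the rest of the settings, with two exceptions. Select the `Key Pair` which was created in the earlier step, and make sure that Auto-terminate is set to yes. With Auto-terminate on, the cluster terminates automatically once the Hadoop job has completed; otherwise it has to be terminated manually.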
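The same choices (log and output locations, key pair, auto-terminate) can be written as a single request to the EMR API. This is a sketch under several assumptions rather than what the console does internally: the release label, the instance types and count, the default role names, and the sample paths under `s3://elasticmapreduce/samples/wordcount/` are values to verify against the current AWS documentation, and the bucket and key names are hypothetical.

```python
def build_wordcount_cluster(bucket: str, key_name: str) -> dict:
    """Build the parameters for boto3's EMR `run_job_flow` call."""
    return {
        "Name": "wordcount-demo",
        "LogUri": f"s3://{bucket}/logs/",
        "ReleaseLabel": "emr-5.36.0",          # assumption: any recent release
        "Applications": [{"Name": "Hadoop"}],
        "Instances": {
            "MasterInstanceType": "m5.xlarge",  # assumption
            "SlaveInstanceType": "m5.xlarge",   # assumption
            "InstanceCount": 3,                 # assumption
            "Ec2KeyName": key_name,
            # False is the API equivalent of Auto-terminate = yes.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [
            {
                "Name": "Word count",
                "ActionOnFailure": "TERMINATE_CLUSTER",
                "HadoopJarStep": {
                    "Jar": "command-runner.jar",
                    "Args": [
                        "hadoop-streaming",
                        "-files",
                        "s3://elasticmapreduce/samples/wordcount/wordSplitter.py",
                        "-mapper", "wordSplitter.py",
                        "-reducer", "aggregate",
                        "-input",
                        "s3://elasticmapreduce/samples/wordcount/input",
                        "-output", f"s3://{bucket}/output/",
                    ],
                },
            }
        ],
        "JobFlowRole": "EMR_EC2_DefaultRole",   # assumption: default roles exist
        "ServiceRole": "EMR_DefaultRole",
    }


def launch(bucket: str, key_name: str) -> str:
    """Start the cluster and return its id."""
    import boto3  # imported lazily so the builder works without boto3

    emr = boto3.client("emr")
    return emr.run_job_flow(**build_wordcount_cluster(bucket, key_name))["JobFlowId"]


if __name__ == "__main__":
    print(launch("emr-wordcount-demo-12345", "emr-demo"))
```

The output location must not exist before the job runs, since Hadoop refuses to write into an existing output directory.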

- Launch the cluster; it should show the `Starting` status. Here are the different stages in the life cycle of the cluster.

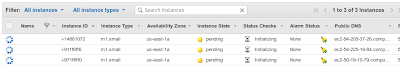
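The life cycle can also be followed from a script by polling the cluster state. The sketch below assumes a boto3 EMR client is passed in as `emr` and that `cluster_id` is the id of the cluster just launched; the polling interval is an arbitrary choice.

```python
import time

# States after which the cluster will not change any more.
TERMINAL_STATES = {"TERMINATED", "TERMINATED_WITH_ERRORS"}


def wait_for_cluster(emr, cluster_id, poll_seconds=30, on_state=print):
    """Poll the cluster until it terminates.

    `emr` is expected to behave like boto3.client("emr"). Returns the list
    of distinct states that were observed, in order.
    """
    seen = []
    while True:
        response = emr.describe_cluster(ClusterId=cluster_id)
        state = response["Cluster"]["Status"]["State"]
        if not seen or seen[-1] != state:
            seen.append(state)
            on_state(state)  # report each state change once
        if state in TERMINAL_STATES:
            return seen
        time.sleep(poll_seconds)
```

A typical call would be `wait_for_cluster(boto3.client("emr"), "j-XXXXXXXXXXXXX")`, where the id is a placeholder. boto3 also ships a `cluster_terminated` waiter, which is shorter to use when the intermediate states are not of interest.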

- Back in the EC2 management console, the EC2 instances should just be starting up. The number of EC2 instances should match the size of the Hadoop cluster.

- After the cluster initializes, the step and the job should be in the running state. The same can be seen from the EMR management console.

- It is also possible to see the status of the individual tasks that are running.

- Finally, the job should move to the completed status approximately 3 minutes after the cluster is launched, and the cluster should move to the terminated status.

- The output data should be in the S3 bucket, at the location specified when configuring the job.
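To look at the result without opening the S3 console, the part files can be read back and merged. This sketch assumes the output consists of `part-*` files with one tab-separated `word<TAB>count` pair per line, which is what a streaming job with the `aggregate` reducer typically writes; the bucket name and the `output/` prefix are hypothetical.

```python
def parse_wordcount(text: str) -> dict:
    """Parse tab-separated `word<TAB>count` lines into a dict of totals."""
    counts = {}
    for line in text.splitlines():
        if "\t" not in line:
            continue  # skip blank or malformed lines
        word, _, count = line.rpartition("\t")
        counts[word] = counts.get(word, 0) + int(count)
    return counts


def read_output(bucket: str, prefix: str = "output/") -> dict:
    """Download every part file under `prefix` and merge the counts."""
    import boto3  # imported lazily so parse_wordcount works without boto3

    s3 = boto3.client("s3")
    totals = {}
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            # Ignore marker files such as _SUCCESS.
            if not obj["Key"].rsplit("/", 1)[-1].startswith("part-"):
                continue
            body = s3.get_object(Bucket=bucket, Key=obj["Key"])["Body"].read()
            for word, count in parse_wordcount(body.decode("utf-8")).items():
                totals[word] = totals.get(word, 0) + count
    return totals


if __name__ == "__main__":
    totals = read_output("emr-wordcount-demo-12345")
    for word, count in sorted(totals.items(), key=lambda kv: -kv[1])[:10]:
        print(word, count)
```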

- Also, the EC2 instances should be terminated once the job has completed. This can be observed from the EC2 management console.

- AWS charges on a pay-per-use basis, so make sure that the data in S3 is deleted manually. S3 doesn't charge much, but the data is of no further use anyway.
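The cleanup can be scripted as well. The sketch below assumes boto3 and deletes everything under a given prefix; the bucket name and the `logs/` and `output/` prefixes are hypothetical. Keys are sent in batches because a single `delete_objects` request accepts at most 1000 keys.

```python
def chunked(items, size=1000):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def delete_prefix(bucket: str, prefix: str) -> int:
    """Delete every object under `prefix` and return how many were deleted."""
    import boto3  # imported lazily so chunked works without boto3

    s3 = boto3.client("s3")
    keys = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    for batch in chunked(keys):
        s3.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": key} for key in batch]},
        )
    return len(keys)


if __name__ == "__main__":
    for prefix in ("logs/", "output/"):
        print(prefix, delete_prefix("emr-wordcount-demo-12345", prefix))
```

Deleting objects is irreversible on a bucket without versioning, so it is worth double-checking the bucket name and prefix before running it.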

In the upcoming blog posts we will look into how to create an SSH tunnel to reach the web consoles provided by Hadoop, and how to submit a custom job to EMR.

