Edit: For easier access, I have moved this to the pages section just below the blog header and am no longer maintaining this entry.

'Next Generation MR', also called 'NextGen MR', 'MRv2' or 'MR2', is a major revamp of the MapReduce engine and will be part of the 0.23 release. MRv1, the old MapReduce engine, will not be supported in the 0.23 release. Although the underlying engine has been revamped in 0.23, the API for interfacing with it remains the same, so existing MapReduce code written for the MRv1 engine should run on MRv2 without modification.

The architecture details and the instructions for building and running MRv2 are scattered across several sources, so this blog entry tries to consolidate everything available on MRv2 in one place. I will keep updating this entry as I learn more about MRv2 instead of creating a new one, so bookmark it and check back often :).

Current Status

http://www.hortonworks.com/update-on-apache-hadoop-0-23/ - 27th September, 2011

http://www.cloudera.com/blog/2011/11/apache-hadoop-0-23-0-has-been-released/ - 15th November, 2011

http://hortonworks.com/apache-hadoop-is-here/ - 16th November, 2011

Home Page

http://hadoop.apache.org/common/docs/r0.23.0/

Architecture

The Hadoop Map-Reduce Capacity Scheduler

The Next Generation of Apache Hadoop MapReduce

Next Generation of Apache Hadoop MapReduce – The Scheduler

Detailed document on MRv2

Presentation

Quick view of MRv2

JIRAs

https://issues.apache.org/jira/browse/MAPREDUCE-279

Videos

Next Generation Hadoop MapReduce by Arun C. Murthy

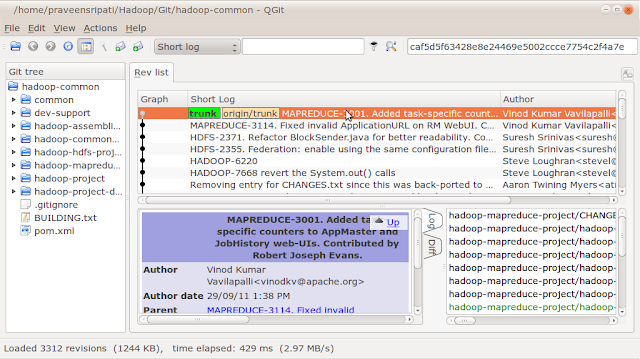

Code

http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.23/

Building from source and running a sample

http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.23/BUILDING.txt

http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.23/hadoop-mapreduce-project/INSTALL
