As mentioned in the official K8S documentation (1), there are different ways of setting up K8S, some meant for learning and some for production. Likewise, there are different ways of setting up K8S on AWS (1). Today we will explore the eksctl way (1), which is fairly easy. There are a lot of tools that make K8S installation easier, but if you want to build a cluster from scratch, then K8S the hard way (1) is the way to go. It also helps with preparing for the CKA certification (1).

As of this writing, AWS EKS charges $0.20 per hour for each Cluster created, independent of the worker nodes in the Cluster. EC2 and EBS for the worker nodes are charged separately. As the Cluster charge is flat, there is no way to optimize it. So, I tried a t2.micro for the worker nodes to reduce the cost. But it didn't work out, as t2.micro supports a maximum of 2 network interfaces and 2 IPv4 addresses per network interface (1). This boils down to the fact that a t2.micro can have a maximum of 4 IP addresses.
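To put the flat Cluster charge in perspective, here is a small sketch that multiplies the hourly rate quoted above by the hours in a month. The function name and the 30-day month are assumptions made for illustration, and the EC2 and EBS charges for the worker nodes are not included.

```shell
#!/bin/sh
# Sketch: flat EKS Cluster charge for a given number of hours.
# The rate is passed in cents so that plain integer shell arithmetic is enough.
# 20 cents/hour is the figure quoted in this post; the 30-day month is an assumption.
eks_cluster_cost_cents() {
  rate_cents_per_hour=$1
  hours=$2
  echo $(( rate_cents_per_hour * hours ))
}

monthly_cents=$(eks_cluster_cost_cents 20 $(( 24 * 30 )))
printf 'EKS Cluster charge: $%d.%02d per 30-day month\n' \
  $(( monthly_cents / 100 )) $(( monthly_cents % 100 ))
```

At the quoted rate this comes to $144 for a Cluster left running for 30 days, which is why Step 6 (deleting the Cluster) matters.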

Whenever a new Pod is created on the worker node, AWS allocates a new IP address from the VPC subnet. As mentioned above, a t2.micro can have a maximum of 4 IP addresses, one of which is attached to the EC2 Instance itself. That leaves 3 IP addresses that can be allocated to the Pods on the worker node. This makes it difficult to use the t2.micro EC2 Instance, which falls under the AWS free tier, as some of the Pods are used by the K8S installation itself. The steps below use a single EC2 Spot Instance (t3.small or t2.medium) for the worker nodes.
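The arithmetic in the paragraph above can be sketched as a small shell function. This follows the simplified reasoning of this post (total addresses minus one for the Instance) and is not necessarily the exact formula EKS uses to cap Pods per node; the function name is made up for illustration.

```shell
#!/bin/sh
# Sketch of the IP budget described above (a simplification).
# Arguments: number of network interfaces, IPv4 addresses per interface.
pod_ip_budget() {
  enis=$1
  ips_per_eni=$2
  total=$(( enis * ips_per_eni ))
  # One address is attached to the EC2 Instance itself.
  echo $(( total - 1 ))
}

# t2.micro: 2 interfaces x 2 addresses each (figures from this post).
echo "t2.micro Pod IP budget: $(pod_ip_budget 2 2)"
```

The limits for other instance types can be passed in the same way, taking the two numbers from the EC2 documentation on network interfaces.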

Also, AWS has recently introduced managed Node Groups (1). With these, most of the grunt work, like upgrading K8S on the worker nodes, is handled by AWS at no additional cost. So, we have node-groups, which have to be managed by the customer, and the new managed-node-groups, which are managed by AWS.

Node-groups have been around for some time and support EC2 Spot Instances, but managed-node-groups are relatively new and, as of now, don't appear to support EC2 Spot Instances.

Here are the steps for creating an AWS EKS Cluster using eksctl. References (1, 2, 3)

Step 1: Create an Ubuntu EC2 Instance (t2.micro) and connect to it. We will run eksctl and the other commands for creating the AWS EKS Cluster on this Instance.

Step 2: Execute the below commands on Ubuntu to create a key pair and install the AWS CLI, aws-iam-authenticator, kubectl and eksctl.

#generation of ssh keypairs to be used by the worker K8S Instances

ssh-keygen -f .ssh/id_rsa

#installation of the required software

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee -a /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y python3-pip kubectl

curl -o aws-iam-authenticator https://amazon-eks.s3-us-west-2.amazonaws.com/1.14.6/2019-08-22/bin/linux/amd64/aws-iam-authenticator

chmod +x ./aws-iam-authenticator

sudo mv ./aws-iam-authenticator /usr/local/bin

pip3 install awscli --upgrade

export PATH="$PATH:/home/ubuntu/.local/bin/"

curl --silent --location "https://github.com/weaveworks/eksctl/releases/download/latest_release/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

sudo mv /tmp/eksctl /usr/local/bin
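Before moving on, it can help to confirm that everything installed above is actually on the PATH. The following is a minimal sketch; the function name `missing_tools` is made up for illustration and only standard shell built-ins are used.

```shell
#!/bin/sh
# Print the name of every listed command that is not found on the PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# The four tools installed in Step 2.
missing=$(missing_tools aws kubectl eksctl aws-iam-authenticator)
if [ -n "$missing" ]; then
  echo "Not installed or not on PATH:"
  echo "$missing"
else
  echo "All tools found."
fi
```

If `aws` is reported as missing right after the pip3 install, the PATH export from the step above has most likely not been applied in the current shell.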

Step 3: Get the access keys for the root account and provide them using the `aws configure` command.

Step 4: Create a cluster.yaml file for a nodegroup or a managed nodegroup and start creating the Cluster. The YAML configuration for both is given below. Creation takes 10-15 minutes. This configuration uses an existing VPC, so remember to change the availability zones and subnet IDs in the YAML to where the worker nodes have to be deployed.

eksctl create cluster -f cluster.yaml

Check the number of nodes in the cluster.

kubectl get nodes
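To check the node count from a script rather than by eye, the output can be piped through a small filter. This is a sketch under the assumption that the second column of `kubectl get nodes --no-headers` is the node status; the function name and the sample lines are made up for illustration.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
# Nodes with a combined status such as "Ready,SchedulingDisabled" are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (needs kubectl and a kubeconfig):
#   kubectl get nodes --no-headers | count_ready_nodes

# Offline demonstration with made-up sample lines:
printf '%s\n' \
  'node-a   Ready      <none>   5m   v1.14.0' \
  'node-b   NotReady   <none>   1m   v1.14.0' | count_ready_nodes
```

With the nodegroup configuration used here (minSize of 1), a count of at least 1 is what to expect once the Cluster is up.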

Once the cluster has been created, the below command can be used to log in to each of the worker nodes. Make sure to replace the IP with that of the worker node's EC2 Instance.

ssh -i ./.ssh/id_rsa ec2-user@3.81.92.65

Step 5: Create a deployment with 2 nginx pods and get the pod details.

kubectl create deployment nginx --image=nginx --replicas=2

kubectl get pods -o wide

Step 6: Delete the Cluster. Again, the deletion takes 10-15 minutes. The progress is displayed in the console.

eksctl delete cluster --wait --region=us-east-1 --name=praveen-k8s-cluster

Step 7: Make sure to terminate the EC2 Instance created in Step 1.

# An example of ClusterConfig showing nodegroups with spot instances

# An example of ClusterConfig showing managed nodegroups with spot instances

1. eksctl uses CloudFormation templates to create the EKS Cluster and the NodeGroup. The status of the Stack creation can be monitored from the CloudFomation Management Console.

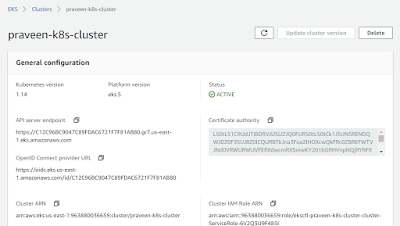

2. The above mentioned CloudFormation templates create a EKS Cluster and the NodeGroup as shown in the below EKS Management Console.

3. One of the EC2 Instance was created in Step 1. Other one was created by eksctl for the NodeGroup worker nodes.

4. Interaction with the Cluster using kubctl to

- get the nodes

- create a deployment and get the list of pods

5. Finally, deletion of the EKS Cluster and the NodeGroup.

As shown above eksctl provides an easy way to create a K8S Cluster in the AWS easily. The same thing can be done with the AWS Management Console also (1). Doing with the AWS Management Console is more of a manual way, which gives us clarity on the different resources getting created and how they interact with each other.

# An example of ClusterConfig showing nodegroups with spot instances

---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: praveen-k8s-cluster
  region: us-east-1

vpc:
  subnets:
    public:
      us-east-1a: { id: subnet-32740f6e }
      us-east-1b: { id: subnet-78146a1f }
      us-east-1c: { id: subnet-16561338 }

nodeGroups:
  - name: ng-1
    ssh:
      allow: true
    minSize: 1
    maxSize: 2
    instancesDistribution:
      instanceTypes: ["t3.small", "t3.medium"]
      onDemandBaseCapacity: 0
      onDemandPercentageAboveBaseCapacity: 0
      spotInstancePools: 2

# An example of ClusterConfig showing managed nodegroups

---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

vpc:
  subnets:
    public:
      us-east-1a: { id: subnet-32740f6e }
      us-east-1b: { id: subnet-78146a1f }
      us-east-1c: { id: subnet-16561338 }

metadata:
  name: praveen-k8s-cluster
  region: us-east-1

managedNodeGroups:
  - name: managed-ng-1
    instanceType: t3.small
    minSize: 1
    maxSize: 1
    desiredCapacity: 1
    volumeSize: 20
    ssh:
      allow: true
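Since the cluster name, region and subnet IDs have to be changed for every account, the managed nodegroup configuration above could also be generated from a few arguments instead of being edited by hand. The following is only a sketch: the function name `render_cluster_config` and its argument order are made up for illustration, and the nodegroup settings are copied from the example above.

```shell
#!/bin/sh
# Print a managed-nodegroup ClusterConfig on stdout.
# Arguments: cluster name, region, then one or more "zone subnet-id" pairs.
render_cluster_config() {
  name=$1
  region=$2
  shift 2
  printf '%s\n' '---' 'apiVersion: eksctl.io/v1alpha5' 'kind: ClusterConfig' ''
  printf 'vpc:\n  subnets:\n    public:\n'
  # One line per availability zone / subnet pair.
  while [ $# -ge 2 ]; do
    printf '      %s: { id: %s }\n' "$1" "$2"
    shift 2
  done
  printf '\nmetadata:\n  name: %s\n  region: %s\n' "$name" "$region"
  cat <<'EOF'

managedNodeGroups:
  - name: managed-ng-1
    instanceType: t3.small
    minSize: 1
    maxSize: 1
    desiredCapacity: 1
    volumeSize: 20
    ssh:
      allow: true
EOF
}

# Values copied from the example above; replace them with your own.
render_cluster_config praveen-k8s-cluster us-east-1 \
  us-east-1a subnet-32740f6e \
  us-east-1b subnet-78146a1f \
  us-east-1c subnet-16561338
```

Redirecting the output to cluster.yaml gives a file that can be passed to the `eksctl create cluster -f cluster.yaml` command from Step 4.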

Screenshots from the above sequence of steps

1. eksctl uses CloudFormation templates to create the EKS Cluster and the NodeGroup. The status of the Stack creation can be monitored from the CloudFomation Management Console.

2. The above mentioned CloudFormation templates create a EKS Cluster and the NodeGroup as shown in the below EKS Management Console.

3. One of the EC2 Instance was created in Step 1. Other one was created by eksctl for the NodeGroup worker nodes.

4. Interaction with the Cluster using kubctl to

- get the nodes

- create a deployment and get the list of pods

5. Finally, deletion of the EKS Cluster and the NodeGroup.

Conclusion

As shown above, eksctl provides an easy way to create a K8S Cluster on AWS. The same can be done with the AWS Management Console (1). Using the AWS Management Console is a more manual process, but it gives us clarity on the different resources getting created and how they interact with each other.
