For those who are interested in Hadoop but are stuck with a Windows machine, or are reluctant to install Linux for one reason or another, here are some alternatives.

a) Install Hadoop on Windows using Cygwin.

b) Wait for Microsoft's Hadoop offering to come out of preview.

c) Use Amazon EMR. Amazon has very clear documentation.

d) Run the CDH virtual image from Cloudera in VirtualBox.

We will go through the last option, using the CDH image from Cloudera, in detail. The CDH documentation can be found here.

The prerequisite is installing VirtualBox on the target machine. VirtualBox can be downloaded from here, and here are the detailed instructions for installing it. A CDH virtual image for VirtualBox also has to be downloaded and extracted. The downloaded file name will be similar to `cloudera-demo-vm-cdh3u2-virtualbox.tar.gz`.
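If no `tar` tool is available on the Windows host, the archive can also be extracted with Python's standard `tarfile` module. This is a minimal sketch, assuming Python 3.9+ is installed on the host and that the disk image inside the archive has a `.vmdk` extension; the function name `extract_cdh_image` is hypothetical.

```python
import tarfile
from pathlib import Path


def extract_cdh_image(archive: str, dest: str) -> list[Path]:
    """Extract a .tar.gz archive into dest and return any .vmdk disk images found.

    Assumption: the CDH VirtualBox download contains a .vmdk disk image.
    """
    dest_path = Path(dest)
    dest_path.mkdir(parents=True, exist_ok=True)
    # "r:gz" opens a gzip-compressed tar archive for reading.
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(dest_path)
    # Search the extracted tree for disk images that VirtualBox can attach.
    return sorted(dest_path.rglob("*.vmdk"))
```

For example, `extract_cdh_image("cloudera-demo-vm-cdh3u2-virtualbox.tar.gz", "cdh-vm")` extracts into a `cdh-vm` folder and returns the path(s) to pick in Step 6.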

Let's get started.

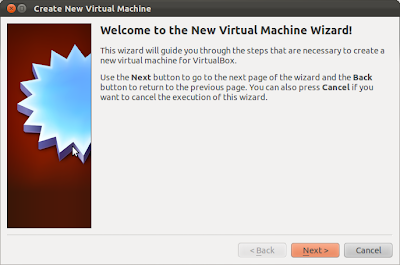

Step 1) Start VirtualBox and click on New (Ctrl-N).

Step 2) Click on Next.

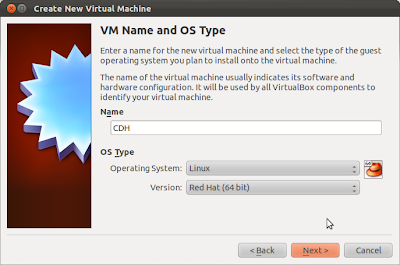

Step 3) Select the options as shown below. Make sure to select `Red Hat (64 bit)`, since CentOS is based on Red Hat and the CDH image is 64 bit. Click on Next.

Step 4) The Cloudera documentation says "This is a 64-bit image and requires a minimum of 1GB of RAM (any less and some services will not start). Allocating 2GB RAM to the VM is recommended in order to run some of the demos and tutorials". I was able to run the Hadoop example with 1536 MB. Note that the memory allocated to the guest OS can be changed later from within VirtualBox.

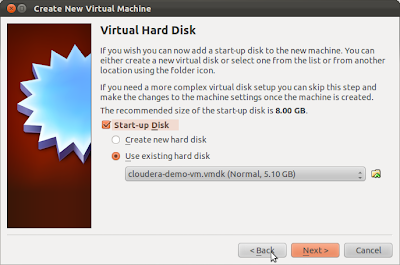

Step 6) Select `Use existing hard disk`, choose the CDH image extracted earlier, and click on Next.

Step 7) Make sure all the details are correct in the summary screen and click on Create.

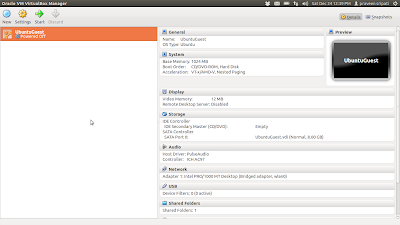

Step 8) The VirtualBox home screen should look like the one below. Select CDH in the left pane and click on Start.

Step 9) After a few moments, the virtual machine will have started along with all the daemons.
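Steps 1 to 9 can also be scripted with VirtualBox's `VBoxManage` command-line tool instead of the GUI wizard. The sketch below builds roughly equivalent command lines and, only when `dry_run` is false, runs them with `subprocess`. It assumes `VBoxManage` is on the PATH; the VM name, the controller name `IDE Controller`, and the disk path are hypothetical placeholders, and 1536 MB mirrors the memory used in Step 4. This is an alternative to the wizard, not a procedure from the Cloudera documentation.

```python
import shlex
import subprocess

CONTROLLER = "IDE Controller"  # hypothetical storage controller name


def vboxmanage_commands(name: str, disk: str, memory_mb: int = 1536) -> list[list[str]]:
    """Return VBoxManage invocations roughly equivalent to the wizard steps above."""
    return [
        # Steps 1-3: create and register a VM with the Red Hat (64 bit) OS type.
        ["VBoxManage", "createvm", "--name", name, "--ostype", "RedHat_64", "--register"],
        # Step 4: guest RAM in MB; also give network adapter 1 NAT networking.
        ["VBoxManage", "modifyvm", name, "--memory", str(memory_mb), "--nic1", "nat"],
        # Step 6: add a controller and attach the extracted CDH disk as an existing disk.
        ["VBoxManage", "storagectl", name, "--name", CONTROLLER, "--add", "ide"],
        ["VBoxManage", "storageattach", name, "--storagectl", CONTROLLER,
         "--port", "0", "--device", "0", "--type", "hdd", "--medium", disk],
        # Step 8: boot the VM.
        ["VBoxManage", "startvm", name],
    ]


def create_and_start(name: str, disk: str, memory_mb: int = 1536, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them in order, stopping on the first failure."""
    for cmd in vboxmanage_commands(name, disk, memory_mb):
        if dry_run:
            print(shlex.join(cmd))  # POSIX-style quoting, for display only
        else:
            subprocess.run(cmd, check=True)
```

Running `create_and_start("CDH", r"C:\path\to\extracted\image.vmdk")` first only prints the commands, which makes it easy to review them before passing `dry_run=False`.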

Step 10) To verify that the Hadoop daemons started properly, check the number of TaskTracker nodes shown by the JobTracker web UI at http://localhost:50030/. On this single-node VM it should be 1.

Also, the output of the `sudo /usr/java/default/bin/jps` command should look similar to the following (process IDs will differ):

```
2914 FlumeMaster
3099 Sqoop
2780 FlumeWatchdog
2239 JobTracker
2850 FlumeWatchdog
2919 FlumeNode
2468 SecondaryNameNode
2019 HMaster
3778 Jps
2145 DataNode
2360 NameNode
2964 RunJar
3076 Bootstrap
2568 TaskTracker
```

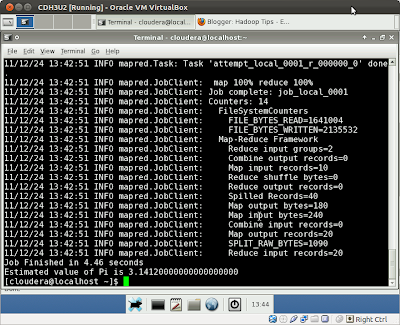
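Rather than reading the jps listing by eye, the check can be automated. A minimal sketch in Python, assuming it runs inside the VM with the same `sudo /usr/java/default/bin/jps` path used above; the required set covers only the core Hadoop daemons from that listing, and the function names are hypothetical.

```python
import subprocess

# Core Hadoop daemons taken from the jps listing above.
REQUIRED = frozenset({"NameNode", "SecondaryNameNode", "DataNode", "JobTracker", "TaskTracker"})


def running_daemons(jps_output: str) -> set[str]:
    """Parse jps lines of the form '<pid> <MainClass>' into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, required: frozenset = REQUIRED) -> frozenset:
    """Return the required daemons that do not appear in the jps output."""
    return required - running_daemons(jps_output)


def check() -> frozenset:
    """Run jps inside the VM (needs sudo) and report any missing core daemons."""
    out = subprocess.run(
        ["sudo", "/usr/java/default/bin/jps"],
        capture_output=True, text=True, check=True,
    ).stdout
    return missing_daemons(out)
```

`check()` returns an empty set when all five core daemons are up; anything it returns points at the daemon whose log is worth reading first.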

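Step 11) Open a terminal inside the VM and run the following command. The two arguments to `pi` are the number of map tasks (10) and the number of samples per map (10,000).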

```
hadoop --config $HOME/hadoop-conf jar /usr/lib/hadoop/hadoop-0.20.2-cdh3u2-examples.jar pi 10 10000
```

On successful completion, the job ends by printing the estimated value of Pi.
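As far as I know, the `pi` example is a quasi-Monte Carlo estimator: each map task generates points in the unit square from a Halton sequence (bases 2 and 3) and counts how many land inside the inscribed circle, and the reduce step combines the counts as π ≈ 4 × inside / total. The single-process sketch below illustrates that idea with 10 × 10000 points to match the command's arguments. It is not Hadoop's code; giving each "map" a contiguous slice of the sequence is an assumption made for illustration.

```python
def halton(index: int, base: int) -> float:
    """Return the index-th element of the van der Corput (1-D Halton) sequence in `base`."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result


def map_task(offset: int, samples: int) -> tuple[int, int]:
    """Count points inside/outside the circle of radius 0.5 centred at (0.5, 0.5)."""
    inside = 0
    for i in range(offset + 1, offset + samples + 1):
        x = halton(i, 2) - 0.5  # base 2 for the x coordinate
        y = halton(i, 3) - 0.5  # base 3 for the y coordinate
        if x * x + y * y <= 0.25:
            inside += 1
    return inside, samples - inside


def estimate_pi(maps: int = 10, samples: int = 10000) -> float:
    # "Map": each task handles its own contiguous slice of the sequence.
    results = [map_task(m * samples, samples) for m in range(maps)]
    # "Reduce": sum the per-task counts.
    inside = sum(r[0] for r in results)
    total = sum(r[0] + r[1] for r in results)
    # Circle area / square area = pi * 0.25 / 1, so pi = 4 * inside / total.
    return 4.0 * inside / total
```

Because Halton points cover the square more evenly than pseudo-random ones, even 100,000 samples give an estimate close to π; raising either argument of the real job refines it further at the cost of more work.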

Notes:

1) Many processors support hardware virtualization (Intel VT-x or AMD-V). It is often disabled in the BIOS and has to be turned on explicitly; VirtualBox needs it to run a 64-bit guest such as the CDH image.

2) Besides core Hadoop, Flume, Sqoop, Hive, and Hue are also started. These can be disabled by removing the execute (x) permission from their scripts in /etc/init.d.

3) For stability and security, CentOS can be brought up to date with the `sudo yum update` command.

4) For better performance and usability, the VirtualBox Guest Additions should be installed in the guest (CentOS).

5) Hue (Web UI for Hadoop) can be accessed from http://localhost:8088/.
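For Note 2 above, here is a minimal Python sketch of clearing the execute bits, the equivalent of `chmod a-x` on each script. The script names you pass in are up to you: list /etc/init.d inside the VM to find the actual names for Flume, Sqoop, Hive, and Hue, and run it as root (for example via sudo). The function name `disable_init_scripts` is hypothetical, and the directory is a parameter so it can be tried on a scratch directory first.

```python
import stat
from pathlib import Path


def disable_init_scripts(names: list[str], init_dir: str = "/etc/init.d") -> list[str]:
    """Remove user/group/other execute permission from the named init scripts.

    Returns the names that were found and changed; missing scripts are skipped.
    """
    changed = []
    for name in names:
        script = Path(init_dir) / name
        if not script.is_file():
            continue
        # Keep only the permission bits, then clear the three execute bits.
        mode = stat.S_IMODE(script.stat().st_mode)
        script.chmod(mode & ~(stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH))
        changed.append(name)
    return changed
```

To undo it, restore the execute bits (for example `chmod a+x` on the same scripts). On CentOS, `chkconfig <service> off` is the more conventional way to keep a service from starting at boot.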
